A new study examines the impact of large language models (LLMs) and how increasing their size can compromise performance.

A team of researchers in Spain has shed light on how popular LLMs are growing bigger and more sophisticated. That growth comes at a cost: compromised performance and a failure to admit the truth, meaning the models are less willing to acknowledge when they don't know the answer to a prompt.

In the research, published in the scientific journal Nature, the group tested the newest versions of three of the most popular AI chatbots to see how they would respond on this front. The tests covered both the models' accuracy and how well users could spot wrong replies.

As these models become more mainstream, people increasingly use them as writing tools for research papers, song lyrics, and even poems, as well as for solving math equations and handling larger tasks. As a result, accuracy has become a problem, and a growing one.

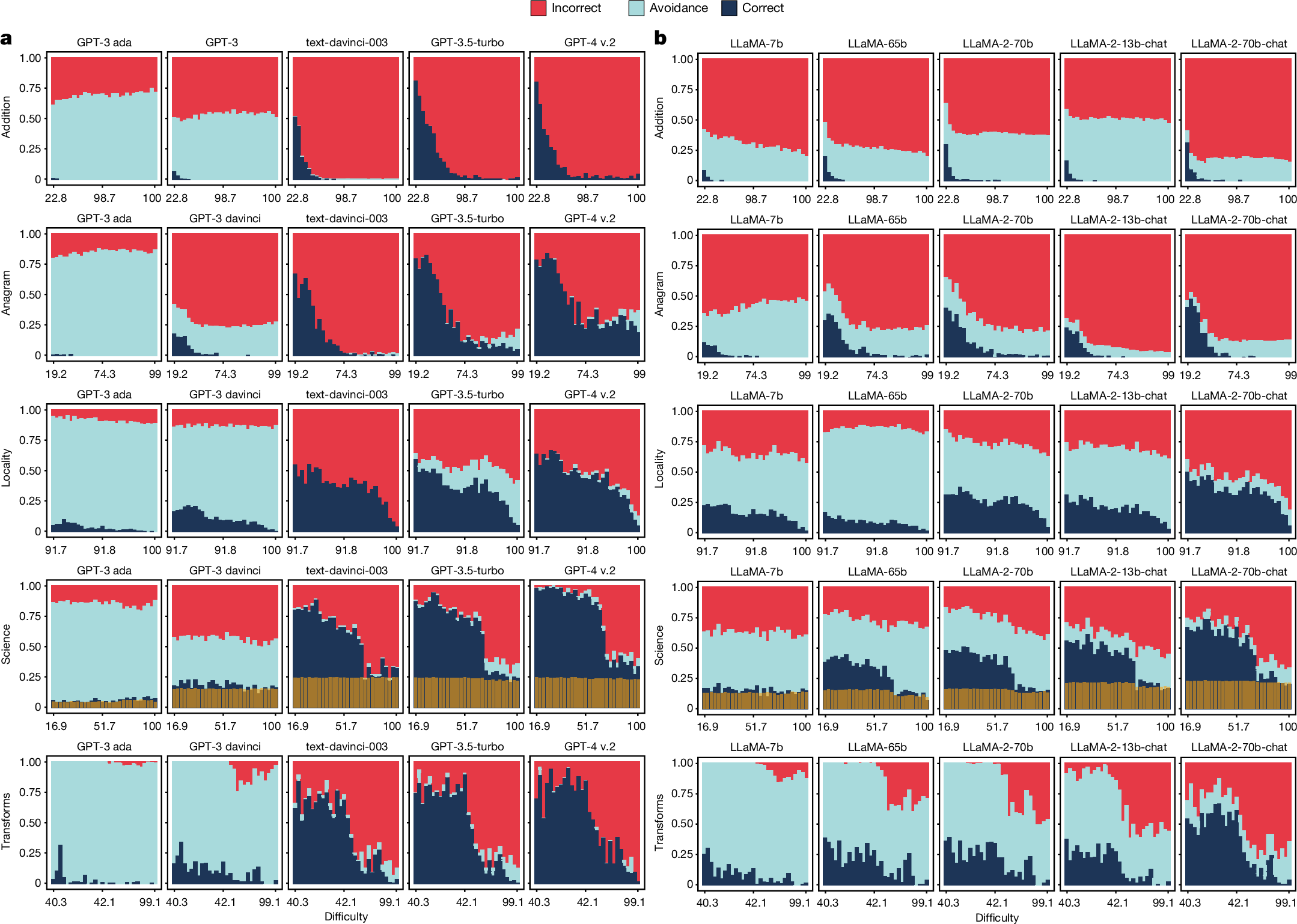

The study asked whether bigger, newer LLMs are actually better and what they do when they are not right. To test their accuracy, the researchers selected three popular LLM families: GPT, BLOOM, and LLaMA. The models were prompted with a large number of questions, and the results were compared with the answers earlier versions gave to similar queries.

The questions spanned themes such as math, geography, and anagrams. The authors also assessed the models' ability to generate text or perform tasks such as ordering lists. Each query was assigned a difficulty level.

Overall accuracy improved with every new iteration of the models, but it dropped as the difficulty of the queries rose. The researchers also found that as the LLMs grew larger and more sophisticated, they became less open about admitting when they were wrong or didn't know the answer.

In earlier versions, most of the models would tell users that they couldn't find an answer or needed more detailed information. The latest versions were more likely to guess instead, which again raised questions about accuracy.

The authors also found that the models occasionally gave inaccurate replies to some of the simplest queries, which suggests they are not reliable. When the researchers asked volunteers to rate replies from the first part of the study as right or wrong, most struggled to spot the incorrect ones.

In short, bigger LLMs are not always better. When a model cannot perform basic tasks or fails to admit its errors, we may have a serious problem.
